Hitman 3 Benchmarks and Performance

Agent 47 is dressed up in AMD red

Hitman 3 just launched, and IO Interactive's latest stealth assassination sandbox game has garnered high ratings from many publications — PC Gamer gives it a 90, for example. But what sort of hardware do you need to run the game properly? We've grabbed a copy from the Epic Games Store, downloaded all 55GB, and set about running some benchmarks.

Anyone familiar with the past two Hitman releases will be right at home with the launcher, which includes a couple of built-in benchmarks if you go into the Options screen. The graphics options also look pretty much the same as before. There are ten main settings, plus super sampling, aka supersampling anti-aliasing (SSAA). You should probably leave SSAA off on most GPUs or, at most, use it very sparingly. Setting SSAA to 2.0 effectively quadruples the number of pixels the game renders before downsampling to your selected resolution, so running at 4K with SSAA at 2.0 is the same as running at 8K. If you have a 1080p display, though, it might be worth enabling, since 1080p with SSAA at 2.0 renders the equivalent of 4K. The remaining settings cover the usual gamut of texture quality, shadows, reflections, and a few other miscellaneous items. We'll discuss those in more detail below.
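For a quick check on the SSAA arithmetic, here is a minimal Python sketch. It assumes the SSAA value scales width and height independently, which is what the "quadruples the pixels" and "4K becomes 8K" figures imply; the function names are our own and have nothing to do with the game's code.

```python
def ssaa_render_resolution(width: int, height: int, ssaa: float) -> tuple[int, int]:
    """Internal render size, assuming the SSAA factor scales each axis."""
    return round(width * ssaa), round(height * ssaa)


def pixel_multiplier(ssaa: float) -> float:
    """Total pixel count grows with the square of the per-axis factor."""
    return ssaa * ssaa


if __name__ == "__main__":
    for label, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
        rw, rh = ssaa_render_resolution(w, h, 2.0)
        print(f"{label} with SSAA 2.0 renders {rw}x{rh} "
              f"({pixel_multiplier(2.0):.0f}x the pixels)")
```

With SSAA at 2.0, 1080p renders at 3840x2160 and 4K renders at 7680x4320, four times the pixels in both cases.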

We did some initial testing with both the Dartmoor and Dubai benchmark sequences, ultimately opting for Dubai as it feels a bit more representative of what you'll actually experience in the game. The Dartmoor test is more demanding and features a lot of physics and particle simulations, but for a stealth game, we don't think most people are concerned with pulling out a pair of machine guns and laying waste to an empty mansion. The Dubai sequence consists of various camera angles from the game's first mission, which has NPC crowds but no explosions. Dartmoor might be useful as a worst-case view of performance, but it also stutters quite a lot during the first ten seconds while the level is still loading.

We've defined our own 'medium' and 'ultra' settings for our testing, and we test both at 1080p, 1440p, and 4K. Medium has everything at medium where applicable, plus anisotropic filtering at 4x, variable rate shading at performance, and simulation quality at base. Ultra simply maxes out all of the settings except super sampling, as noted above. Interestingly, there's no DirectX 11 option this time: it's all DX12, all the time. In the previous two games, DX12 did help performance on most GPUs, and it definitely boosted fps at lower resolutions and settings. IOI seems ready to ditch the old DX11 option and focus solely on DX12, and as we'll see in a moment, frame rates are relatively high.
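To make the test plan concrete, the sketch below encodes both custom presets and enumerates the runs each GPU goes through. The dictionary keys are hypothetical labels rather than Hitman 3's actual setting names, only the settings spelled out in the text are listed, and treating a super sampling value of 1.0 as "off" is our assumption.

```python
from itertools import product

# Hypothetical labels for our two custom presets; these are NOT the game's
# own setting or config-file names. Only settings named in the text appear.
PRESETS = {
    "medium": {
        "other_settings": "medium",            # everything at medium where applicable
        "anisotropic_filtering": "4x",
        "variable_rate_shading": "performance",
        "simulation_quality": "base",
        "super_sampling": 1.0,                 # assumed to mean SSAA off
    },
    "ultra": {
        "other_settings": "maximum",           # every setting maxed out...
        "super_sampling": 1.0,                 # ...except super sampling
    },
}

RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
GRAPHICS_API = "DX12"  # Hitman 3 offers no DX11 option


def benchmark_runs() -> list[tuple[str, str, str]]:
    """Every preset at every resolution: the runs needed for each GPU."""
    return [(preset, res, GRAPHICS_API)
            for preset, res in product(PRESETS, RESOLUTIONS)]


if __name__ == "__main__":
    runs = benchmark_runs()
    print(f"{len(runs)} runs per GPU")
    for run in runs:
        print(*run)
```

Two presets at three resolutions works out to six runs per GPU, all on DX12.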

Our test PC, for now, consists of a Core i9-9900K running on an MSI MEG Z390 ACE motherboard with 2x16GB Corsair DDR4-3600 CL16 memory. We've grabbed the latest AMD and Nvidia GPUs for starters, but we'll look at adding more GPUs and some additional CPU tests soon. (Updated with RTX 20-series GPUs now. More to come!)

Hitman 3 has some sort of partnership with Intel this time, but it sounds like it's focused on CPU optimizations rather than GPU enhancements. Unfortunately, the extra 'Intel sauce' doesn't show up in the settings menu, and the details of what Intel has helped with are rather vague. Eight-core and higher CPUs may show some extra details, and Xe Graphics might have a few extras as well. Based on what we've seen so far with AMD and Nvidia GPUs, the Intel collaboration might be more for future updates (Intel discrete graphics is mentioned at the end of the video, for example).

Hitman 3 Graphics Benchmarks

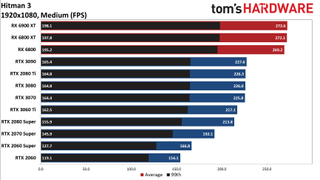

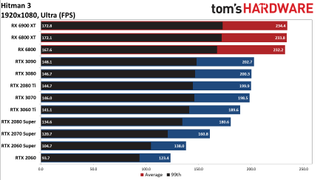

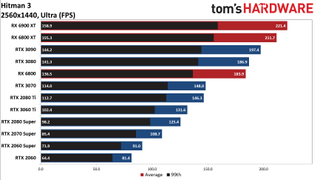

Starting at 1080p, whether you're running medium quality or ultra quality, performance isn't going to be a problem with any of the RTX 30-series or RX 6000-series graphics cards. Even the RTX 20-series cards, from the 2060 up, skate past 1080p with nary a hiccup.

Interestingly, AMD's GPUs all hold a clear performance lead, even though they're CPU limited at 1080p. Nvidia's GPUs all max out at around 220-230 fps at medium and 190-200 fps at ultra, while AMD's GPUs hit 270 fps at medium and 230-235 fps at ultra. AMD did release new 21.1.1 drivers that are game ready for Hitman 3, but we ran a few initial tests with the 20.12.1 drivers, and performance wasn't all that different (a few percent slower at most).
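The size of AMD's 1080p lead is easier to judge as a percentage. This sketch takes the approximate fps ranges quoted above and computes the smallest and largest possible gap (slowest AMD result against fastest Nvidia result, and vice versa). It's simple arithmetic on rounded numbers, not a substitute for the charts.

```python
def lead_range(amd_fps: tuple[float, float],
               nvidia_fps: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest percentage lead of one (low, high) fps range over another."""
    low = (amd_fps[0] / nvidia_fps[1] - 1) * 100   # slowest AMD vs fastest Nvidia
    high = (amd_fps[1] / nvidia_fps[0] - 1) * 100  # fastest AMD vs slowest Nvidia
    return low, high


if __name__ == "__main__":
    # Approximate 1080p ceilings from the text: (low, high) fps per vendor.
    medium = lead_range((270, 270), (220, 230))
    ultra = lead_range((230, 235), (190, 200))
    print(f"medium: {medium[0]:.0f}-{medium[1]:.0f}% lead")
    print(f"ultra: {ultra[0]:.0f}-{ultra[1]:.0f}% lead")
```

With those inputs, AMD's lead works out to roughly 17-23 percent at medium and 15-24 percent at ultra.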

Regardless, we need to step up the resolution if we're going to tax these modern GPUs. We'll add additional commentary once we've tested with some mainstream and budget GPUs as well.

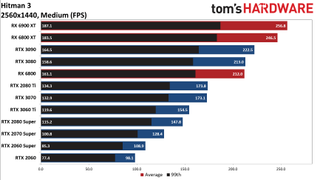

Running at 1440p, the RTX 3060 Ti still reaches 132 fps at ultra quality, so discussions about which GPUs run Hitman 3 best are still largely academic. The RTX 2060 also clears 60 fps comfortably, at 81 fps. Basically, if you have a high-end graphics card from the past two generations, you should be fine, and most of the GPUs (RTX 3070 and above) average 144 fps or more, perfect if you have one of the best gaming monitors. If you can't quite break 144 fps, a G-Sync or FreeSync display should smooth out the occasional stutters.
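Frame rates are easier to reason about as frame times. The sketch below converts average fps into milliseconds per frame and checks whether that average keeps pace with a 144Hz panel, which refreshes every 6.94 ms. On a fixed-refresh display with vsync enabled, a frame that misses its refresh window has to wait for the next one, which is the stutter that G-Sync and FreeSync avoid by refreshing when the frame is ready. Averages also hide frame-to-frame variance, so treat this as a rough guide; the fps values are the ones quoted above.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame, in milliseconds."""
    return 1000.0 / fps


def meets_refresh(fps: float, refresh_hz: float) -> bool:
    """True if the average frame rate keeps up with a fixed-refresh display."""
    return fps >= refresh_hz


if __name__ == "__main__":
    for fps in (144, 132, 81, 60):
        print(f"{fps:>3} fps = {frame_time_ms(fps):.2f} ms per frame, "
              f"keeps up with 144 Hz: {meets_refresh(fps, 144)}")
```

At 132 fps (the RTX 3060 Ti at 1440p ultra) each frame averages about 7.58 ms, just over the 144Hz budget, which is exactly the case where variable refresh helps.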

Looking at the individual cards, the RTX 3090 barely drops at all going from 1080p to 1440p, while the other GPUs lose anywhere from 5 percent (6900 XT) to 30 percent (3060 Ti) of their 1080p performance. That's pretty typical, and the drop corresponds to whether a particular setup is more CPU or more GPU limited.
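The CPU-versus-GPU explanation can be sketched as a toy model: the GPU's frame rate scales inversely with pixel count, and the delivered frame rate can never exceed a CPU-side ceiling. Both assumptions are simplifications (real GPUs have fixed per-frame costs, and the CPU ceiling shifts with drivers and scene content), and the fps inputs below are hypothetical, not our measurements. Since 1440p has about 1.78 times as many pixels as 1080p, a fully GPU-bound card loses 43.75 percent in this model, while a card whose GPU headroom exceeds the CPU cap at both resolutions loses nothing, much like the RTX 3090.

```python
PIXELS = {"1080p": 1920 * 1080, "1440p": 2560 * 1440, "4K": 3840 * 2160}


def modeled_fps(cpu_cap: float, gpu_fps_1080p: float, res: str) -> float:
    """Toy model: GPU throughput scales inversely with pixel count, and the
    delivered frame rate can never exceed the CPU-side ceiling."""
    gpu_fps = gpu_fps_1080p * PIXELS["1080p"] / PIXELS[res]
    return min(cpu_cap, gpu_fps)


def percent_drop(fps_before: float, fps_after: float) -> float:
    """Share of the original frame rate lost, in percent."""
    return (1 - fps_after / fps_before) * 100


if __name__ == "__main__":
    cpu_cap = 200.0  # hypothetical CPU-side frame-rate ceiling
    # Hypothetical GPU-only frame rates at 1080p (i.e. with no CPU limit).
    for name, gpu_1080p in {"fast GPU": 400.0, "slower GPU": 220.0}.items():
        a = modeled_fps(cpu_cap, gpu_1080p, "1080p")
        b = modeled_fps(cpu_cap, gpu_1080p, "1440p")
        print(f"{name}: {a:.0f} -> {b:.0f} fps ({percent_drop(a, b):.0f}% drop)")
```

Here the hypothetical fast GPU holds 200 fps at both resolutions, while the slower one drops from 200 to about 124 fps, a 38 percent loss.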

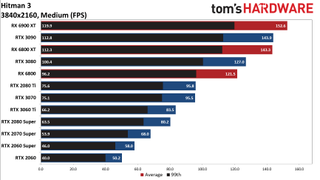

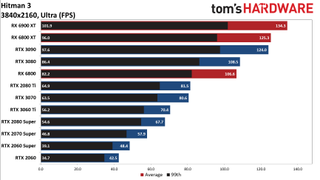

Wrapping up with 4K testing, the RTX 3060 Ti and RTX 2080 Super both still break 60 fps at 4K ultra. Cards below that mark struggle a bit, though even the RTX 2060 remains playable at 42 fps.

Meanwhile, the AMD vs. Nvidia comparisons continue to favor AMD by quite a lot, at least on the latest-generation GPUs. That assumes the suggested prices for the various GPUs are anywhere close to reality, which they aren't right now. Nvidia narrows the gap at 4K, but the RX 6800 XT is still faster than the RTX 3090, and the RX 6800 nips at the heels of the RTX 3080 while easily staying ahead of the RTX 3070.
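Because value comparisons hinge on list prices, here's a small sketch using the US launch MSRPs (street prices at the time were far higher). It computes how many times the rival card's frame rate a GPU would need to deliver to match the rival's fps per dollar; no benchmark data goes into it.

```python
# US launch MSRPs in dollars; actual street prices in early 2021 were much higher.
MSRP_USD = {
    "RTX 3090": 1499, "RTX 3080": 699, "RTX 3070": 499, "RTX 3060 Ti": 399,
    "RX 6900 XT": 999, "RX 6800 XT": 649, "RX 6800": 579,
}


def breakeven_ratio(card: str, rival: str) -> float:
    """Multiple of the rival's fps that `card` needs to match its fps per dollar."""
    return MSRP_USD[card] / MSRP_USD[rival]


if __name__ == "__main__":
    pairs = [("RTX 3090", "RX 6800 XT"), ("RTX 3080", "RX 6800"), ("RX 6800", "RTX 3070")]
    for card, rival in pairs:
        print(f"{card} needs {breakeven_ratio(card, rival):.2f}x the fps "
              f"of the {rival} to match its fps per dollar")
```

At MSRP, the RTX 3090 would need about 2.31 times the RX 6800 XT's frame rate to match it on fps per dollar, the RTX 3080 about 1.21 times the RX 6800's, and the RX 6800 about 1.16 times the RTX 3070's.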

Next, we'll run some GTX 16-series and RX 5000-series benchmarks to see how last-gen GPUs stack up. Based on what we've seen so far, Hitman 3 isn't nearly as demanding as some other games, at least not in this initial release.

Hitman 3: Future Updates Planned

That last bit is important. Hitman 3 is already a very nice looking game, but IOI plans to update the game with additional features, including ray tracing support, in the coming months. However, it's not clear when the DirectX Raytracing (DXR) update will arrive — or if it will even make the game look all that different.

One of the big benefits of ray tracing is the ability to do "proper" reflections and lighting. Most games fake shadows, reflections, and other elements using various graphics techniques, but Hitman 3 goes beyond straight SSR (screen space reflections) in some areas. For example, it was a nice surprise to see Agent 47 and his surroundings properly reflected in building windows right at the start of the first level. Bathroom mirrors also work properly. But other reflective surfaces only do SSR, meaning they can only reflect what's visible on the screen.
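To illustrate why plain SSR can only reflect what's already on screen, here's a toy one-row ray march in Python. It's a deliberately simplified sketch: real SSR runs on the GPU against depth and normal buffers, with refinements and fallbacks such as cube maps, and whatever technique IOI uses for the windows and mirrors isn't documented here. The screen contents are made up for the example.

```python
import math
from typing import Optional


def ssr_trace(depth: list[list[float]], color: list[list[str]],
              start: tuple[float, float, float],
              step: tuple[float, float, float],
              max_steps: int = 64) -> Optional[str]:
    """Toy screen-space reflection lookup.

    Marches a reflected ray across the screen (x, y in pixels, z as view depth)
    and returns the colour of the first on-screen surface the ray passes behind.
    Returns None when the ray leaves the viewport, because SSR only has the
    current frame's depth and colour buffers to sample from.
    """
    height, width = len(depth), len(depth[0])
    x, y, z = start
    dx, dy, dz = step
    for _ in range(max_steps):
        x, y, z = x + dx, y + dy, z + dz
        px, py = math.floor(x), math.floor(y)
        if not (0 <= px < width and 0 <= py < height):
            return None  # off-screen: there is nothing to reflect
        if z >= depth[py][px]:
            return color[py][px]  # the ray went behind a visible surface
    return None  # gave up without finding a hit


if __name__ == "__main__":
    # One-row "screen": a distant wall with a character standing at pixel 5.
    depth = [[100.0, 100.0, 100.0, 100.0, 100.0, 5.0, 100.0, 100.0]]
    color = [["wall"] * 5 + ["agent47"] + ["wall"] * 2]
    print(ssr_trace(depth, color, (0.5, 0.5, 0.0), (1.0, 0.0, 1.0)))   # heads right
    print(ssr_trace(depth, color, (0.5, 0.5, 0.0), (-1.0, 0.0, 1.0)))  # heads off-screen
```

The first ray finds the character because he's visible in the frame; the second walks off the left edge and comes back empty, which is why SSR reflections vanish or cut off near screen edges.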

There are some compromises with the mirrored reflections, though. They appear to run at half the target resolution, which means there's a lot of aliasing present. That's a bit odd, as it shouldn't be hard to run an AA post-processing filter to improve the look. Still, after seeing so many reflective surfaces in other games that don't accurately model things (I'm looking at you, Cyberpunk 2077, with your lack of V reflections), at least Hitman 3 attempts to bridge the gap between SSR and full ray traced reflections.
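The text doesn't say whether "half the target resolution" means half per axis or half the pixels. Assuming half per axis, the sketch below shows the buffer size and why that looks jaggy: each reflection texel covers a 2x2 block of screen pixels, and a nearest-neighbour upscale turns a smooth edge into stair steps, while even a simple linear filter softens the transition. It only illustrates the sampling problem in one dimension; it is not IOI's reflection code, and a post-process AA pass such as FXAA would be a separate fix.

```python
import math


def half_res(width: int, height: int) -> tuple[int, int]:
    """Reflection buffer size, assuming 'half resolution' means half per axis."""
    return width // 2, height // 2


def upscale_nearest(row: list[float], factor: int) -> list[float]:
    """Repeat each source sample `factor` times (blocky, stair-stepped edges)."""
    return [v for v in row for _ in range(factor)]


def upscale_linear(row: list[float], factor: int) -> list[float]:
    """Linearly interpolate between neighbouring samples, clamping at the ends."""
    n = len(row)
    out = []
    for i in range(n * factor):
        pos = (i + 0.5) / factor - 0.5           # output pixel centre in source space
        left = max(0, min(n - 1, math.floor(pos)))
        right = min(n - 1, left + 1)
        t = min(max(pos - left, 0.0), 1.0)       # blend weight toward the right sample
        out.append(row[left] * (1 - t) + row[right] * t)
    return out


if __name__ == "__main__":
    w, h = half_res(3840, 2160)
    print(f"4K target -> {w}x{h} reflection buffer (1/4 of the pixels)")
    edge = [0.0, 1.0]  # a dark-to-bright edge in the half-res buffer
    print("nearest:", upscale_nearest(edge, 2))
    print("linear: ", upscale_linear(edge, 2))
```

The nearest-neighbour result jumps straight from 0.0 to 1.0, while the linear version steps through 0.25 and 0.75.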

The thing is, Hitman 3 already looks good right now, and if the choice comes down to improved reflections and shadows at the cost of plummeting performance, most people will be happier with higher fps. Or maybe IOI is waiting for Intel's high-end Xe HPG solution, which will also support ray tracing, in which case it might be late 2021 before we get the patch. For now, the game runs well and looks very pretty. If your PC could handle the previous two Hitman games, it should still be fine for this third chapter, which concludes the current story arc.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

UWguy: Supposedly they are adding ray tracing at a later date. Please re-benchmark and let us know if he is still wearing red then. Thanks.

Math Geek: Usually enjoy these benchmark articles, but only including cards that can't be bought takes some of the fun out of it :)

Maybe a mid-range and a budget card would be useful as well, to see if someone who did not spend $1500+ on their PC can play the game!!

TCA_ChinChin: Game runs a lot better on Nvidia GPUs: Perfectly normal, no need to call it out. Happens all the time.

Game runs a lot better on AMD GPUs: "Agent 47 is dressed up in AMD red".

benderman: I just registered to call out this article. Big BS right there. Just check other outlets: the AMD cards are 3-5% behind Nvidia. Just as an example, look at Computerbase for the same resolutions. Not sure what you guys did, but AMD cards aren't hitting 200+ fps anywhere.

MasterMadBones:

benderman said: I just registered to call out this article. Big BS right there. Just check other outlets: the AMD cards are 3-5% behind Nvidia. Just as an example, look at Computerbase for the same resolutions. Not sure what you guys did, but AMD cards aren't hitting 200+ fps anywhere.

Waiting for Jarred to confirm this, but AMD released a driver update that's supposed to boost performance in Hitman 3 by up to 10%. There may be a discrepancy in the driver version used by other outlets.

Edit: it also seems that I can't read.

JarredWaltonGPU:

benderman said: I just registered to call out this article. Big BS right there. Just check other outlets: the AMD cards are 3-5% behind Nvidia. Just as an example, look at Computerbase for the same resolutions. Not sure what you guys did, but AMD cards aren't hitting 200+ fps anywhere.

Gee, it couldn't possibly be because I used a different CPU, could it? Not sure which test sequence was used there either. But considering it's Intel promoted, yeah, Core i9-9900K might actually beat Ryzen 9 5950X. It happens.

vilor:

benderman said: I just registered to call out this article. Big BS right there. Just check other outlets: the AMD cards are 3-5% behind Nvidia. Just as an example, look at Computerbase for the same resolutions. Not sure what you guys did, but AMD cards aren't hitting 200+ fps anywhere.

Guru3D also has AMD winning this game, with a 5950X CPU. Also, the 6800 is virtually tied with the 3080, same as here.

Maybe the Computerbase article is wrong... what other articles did you find?

You said Computerbase was just an example; care to throw out another?

Maybe you got angry for another reason and had to make an account to say this instead of checking more articles.

benderman:

vilor said: Guru3D also has AMD winning this game, with a 5950X CPU. Also, the 6800 is virtually tied with the 3080, same as here.

Maybe the Computerbase article is wrong... what other articles did you find?

You said Computerbase was just an example; care to throw out another?

Maybe you got angry for another reason and had to make an account to say this instead of checking more articles.

It rather seems to me that some of these tests try to pander to a certain audience. Guru3D has only a 10-15 fps advantage for AMD at 1080p, not 30 fps like in this test. It also seems to me that the performance comparison is heavily dependent on the scene. Computerbase uses neither the Dubai nor the Dartmoor scene, and Nvidia comes out ahead.

There's absolutely no logic to a 6800 XT being 30 fps ahead of a 3090.

The other tests I was referring to were videos all over YouTube. I don't see any huge gap in performance like here.

JarredWaltonGPU:

benderman said: It rather seems to me that some of these tests try to pander to a certain audience. Guru3D has only a 10-15 fps advantage for AMD at 1080p, not 30 fps like in this test. It also seems to me that the performance comparison is heavily dependent on the scene. Computerbase uses neither the Dubai nor the Dartmoor scene, and Nvidia comes out ahead.

There's absolutely no logic to a 6800 XT being 30 fps ahead of a 3090.

The other tests I was referring to were videos all over YouTube. I don't see any huge gap in performance like here.

Here's the logic:

AMD has traditionally had good DX12 performance. Navi 21 has a 128MB L3 "Infinity Cache" that some games respond really well to. The built-in Dubai benchmark may not be as demanding as other areas of the game. If you play in an area that has more NPC stuff going on, you will become more CPU limited, which in turn means you're less likely to see big differences between the GPUs. You're also looking at the 1080p results, which is the best-case scenario for AMD's large cache. Most places probably didn't run at medium and ultra at 1080p/1440p/4K on all of the GPUs. The fastest GPUs will all hit CPU bottlenecks at 1080p, which means drivers and other factors come into play.

Look at GPU limited resolutions (mostly 4K in this game) and the 3090 is basically tied with the 6800 XT. There are other games where we see similar results. Assassin's Creed Valhalla for sure, Borderlands 3 at 1440p, and Forza Horizon 4 are all examples of AMD's 6900 XT leading the 3090. New drivers could improve Nvidia's standings. Game patches could do that as well. Maybe in the game itself (rather than built-in benchmark) Nvidia does better. Investigating every potential factor takes time, though.

These are early results, and they're valid for what they show. Are they universally applicable to the game as a whole? I don't know yet, but in general it isn't even a major factor. You can't buy these cards, and they're all running at well over 60 fps even at 4K and max settings -- in a relatively slower paced stealth game.
